Is Artificial Intelligence Really an Existential Threat to Humanity?

ARTIFICIAL INTELLIGENCE-AI, TECHNOLOGY, 17 Aug 2015

Edward Moore Geist – Bulletin of the Atomic Scientists

Superintelligence: Paths, Dangers, Strategies is an astonishing book with an alarming thesis: Intelligent machines are “quite possibly the most important and most daunting challenge humanity has ever faced.” In it, Oxford University philosopher Nick Bostrom, who has built his reputation on the study of “existential risk,” argues forcefully that artificial intelligence might be the most apocalyptic technology of all. With intellectual powers beyond human comprehension, he prognosticates, self-improving artificial intelligences could effortlessly enslave or destroy Homo sapiens if they so wished. While he expresses skepticism that such machines can be controlled, Bostrom claims that if we program the right “human-friendly” values into them, they will continue to uphold these virtues, no matter how powerful the machines become.

These views have found an eager audience. In August 2014, PayPal cofounder and electric car magnate Elon Musk tweeted “Worth reading Superintelligence by Bostrom. We need to be super careful with AI. Potentially more dangerous than nukes.” Bill Gates declared, “I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.” More ominously, legendary astrophysicist Stephen Hawking concurred: “I think the development of full artificial intelligence could spell the end of the human race.” Proving his concern went beyond mere rhetoric, Musk donated $10 million to the Future of Life Institute “to support research aimed at keeping AI beneficial for humanity.”

Superintelligence is propounding a solution that will not work to a problem that probably does not exist, but Bostrom and Musk are right that now is the time to take the ethical and policy implications of artificial intelligence seriously. The extraordinary claim that machines can become so intelligent as to gain demonic powers requires extraordinary evidence, particularly since artificial intelligence (AI) researchers have struggled to create machines that show much evidence of intelligence at all. While these investigators’ ultimate goals have varied since the emergence of the discipline in the mid-1950s, the fundamental aim of AI has always been to create machines that demonstrate intelligent behavior, whether to better understand human cognition or to solve practical problems. Some AI researchers even tried to create the self-improving reasoning machines Bostrom fears. Through decades of bitter experience, however, they learned not only that creating intelligence is more difficult than they initially expected, but also that it grows increasingly harder the smarter one tries to become. Bostrom’s concept of “superintelligence,” which he defines as “any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest,” builds upon similar discredited assumptions about the nature of thought that the pioneers of AI held decades ago. A summary of Bostrom’s arguments, contextualized in the history of artificial intelligence, demonstrates how this is so.

In the 1950s, the founders of the field of artificial intelligence assumed that the discovery of a few fundamental insights would make machines smarter than people within a few decades. By the 1980s, however, they discovered fundamental limitations that show that there will always be diminishing returns to additional processing power and data. Although these technical hurdles pose no barrier to the creation of human-level AI, they will likely forestall the sudden emergence of an unstoppable “superintelligence.”

The risks of self-improving intelligent machines are grossly exaggerated and ought not serve as a distraction from the existential risks we already face, especially given that the limited AI technology we already have is poised to make threats like those posed by nuclear weapons even more pressing than they currently are. Disturbingly, little or no technical progress beyond that demonstrated by self-driving cars is necessary for artificial intelligence to have potentially devastating, cascading economic, strategic, and political effects. While policymakers ought not lose sleep over the technically implausible menace of “superintelligence,” they have every reason to be worried about emerging AI applications such as the Defense Advanced Research Projects Agency’s submarine-hunting drones, which threaten to upend longstanding geostrategic assumptions in the near future. Unfortunately, Superintelligence offers little insight into how to confront these pressing challenges.

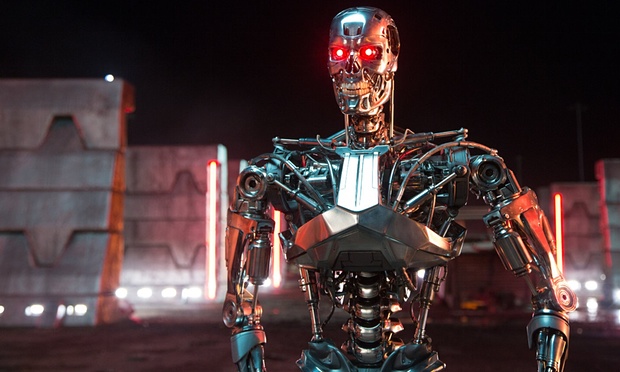

Over 1,000 leading experts in artificial intelligence [including Stephen Hawking] have signed an open letter calling for a ban on military AI development and autonomous weapons like those depicted in the Terminator sci-fi franchise. Photograph: Moviestore/REX Shutterstock

Superintelligence is the culmination of intellectual trends that have been mounting for decades and have had a significant following in Silicon Valley for many years. Ray Kurzweil, director of engineering at Google, has long been an apostle for the notion that self-improving AI will bring about a technological revolution he calls the “singularity,” after which human existence will be so transformed as to be unrecognizable. In his rapturous vision, uploaded human minds will merge with artificial intelligence to live forever either in android bodies or as computer simulations. While Kurzweil sees AI as a panacea for all human problems, including mortality, others have viewed the prospect of self-improving intelligent machines with alarm. Charismatic autodidact Eliezer Yudkowsky co-founded the Machine Intelligence Research Institute in 2000 to “help humanity prepare for the moment when machine intelligence exceeded human intelligence.” This organization used financial support from wealthy Silicon Valley patrons to hire a full-time staff that has produced an increasingly pessimistic series of publications painting AI as a potential menace to the future of humanity. Although academics had largely dismissed both Kurzweil’s and Yudkowsky’s conceptions of “singularitarianism” until now, Bostrom’s Superintelligence has succeeded in bringing these ideas into mainstream intellectual discourse.

Bostrom’s arguments owe much to Yudkowsky’s writings about the dangers of “unfriendly” artificial intelligence—a debt he freely acknowledges. Bostrom is surprisingly agnostic about what form a “superintelligence” might take. He explores the prospect that genetic engineering, eugenics, or high-speed computer emulations of human brains, in addition to fully “artificial” intelligence, could produce entities much smarter than present-day humans. Bostrom’s postulated superintelligences are not merely somewhat faster-thinking or more knowledgeable than humans, but qualitatively superior agents with preternatural abilities. Concluding confidently that “machines will eventually greatly exceed biology in general intelligence,” he argues furthermore that it is “likely” that machines could recursively improve their own intelligence so rapidly as to rise from mere human-level intelligence to godlike power in an “intelligence explosion” lasting “minutes, hours, or days.” After this epic development, Bostrom envisions that a superintelligence would boast what he terms “superpowers” encompassing intelligence amplification, strategizing, social manipulation, hacking, technology research, and economic productivity. According to Superintelligence, the lucky beneficiary of an intelligence explosion would probably gain a “decisive strategic advantage,” which it could then employ to form a “singleton,” defined as “a world order in which there is at the global level a single decision-making agency.” Rendered smart enough by the “intelligence explosion” to foresee and prevent any human attempt to “pull the plug,” machines could take over the world overnight.

In contrast to the vast majority of philosophical literature on artificial intelligence, which is concerned primarily with whether machines can really be “conscious” in the same sense humans are, Superintelligence rightly dismisses this issue as largely irrelevant to the possible hazards intelligent machines might pose to mankind. If anything, machines capable of conceiving and actualizing elaborate plans but lacking self-awareness could be far more dangerous than mechanical analogues of human minds. Crucial to Bostrom’s argument is his belief that even in the absence of conscious experiences, intelligent machines would still have and pursue goals, and he additionally propounds that “more or less any level of intelligence could in principle be combined with more or less any final goal.” In one particularly lurid example, Bostrom paints a picture of how a superintelligent, but otherwise unconscious machine might interpret a seemingly innocuous goal, such as “maximize the production of paperclips,” by converting the entire earth, as well as all additional matter it could access, into office supplies. Preposterous as this scenario might seem, the author is entirely serious about such potential counterintuitive consequences of an “intelligence explosion.”

As this example suggests, Bostrom believes that superintelligences will retain the same goals they began with, even after they have increased astronomically in intelligence. “Once unfriendly superintelligence exists,” he warns, “it would prevent us from replacing it or changing its preferences.” This assumption—that superintelligences will do whatever is necessary to maintain their “goal-content integrity”—undergirds his analysis of what, if anything, can be done to prevent artificial intelligence from destroying humanity. According to Bostrom, the solution to this challenge lies in building a value system into AIs that will remain human-friendly even after an intelligence explosion, but he is pessimistic about the feasibility of this goal. “In practice,” he warns, “the control problem … looks quite difficult,” but “it looks like we will only get one chance.”

Convinced that sufficient “intelligence” can overcome almost any obstacle, Bostrom acknowledges few limits on what artificial intelligences might accomplish. Engineering realities rarely enter into Bostrom’s analysis, and those that do contradict the thrust of his argument. He admits that the theoretically optimal intelligence, a “perfect Bayesian agent that makes probabilistically optimal use of available information,” will forever remain “unattainable because it is too computationally demanding to be implemented in any physical computer.” Yet Bostrom’s postulated “superintelligences” seem uncomfortably close to this ideal. The author offers few hints of how machine superintelligences would circumvent the computational barriers that render the perfect Bayesian agent impossible, other than promises that the advantages of artificial components relative to human brains will somehow save the day. But over the course of 60 years of attempts to create thinking machines, AI researchers have come to the realization that there is far more to intelligence than simply deploying a faster mechanical alternative to neurons. In fact, the history of artificial intelligence suggests that Bostrom’s “superintelligence” is a practical impossibility.

The General Problem Solver: “A Particularly Stupid Program for Solving Puzzles”

The dream of creating machines that think dates back to ancient times, but the invention of digital computers in the middle of the 20th century suddenly made it look attainable. The 17th-century German polymath Gottfried Leibniz sought to create a universal language from symbolic logic, along with a calculus of reasoning for manipulating those symbols. In his foundational 1948 work Cybernetics, MIT mathematician Norbert Wiener noted approvingly that “just as the calculus of arithmetic lends itself to a mechanization progressing through the abacus and the desk computing machine to the ultra-rapid computing machines of the present day, so the calculus ratiocinator of Leibniz contains the germs of the machina ratiocinatrix, the reasoning machine.” By the second half of the 1950s, artificial intelligence pioneers claimed to have already created such machines.

In 1957, future Nobel laureate Herbert A. Simon made a speech declaring that the age of intelligent machines had already dawned. “It is not my aim to surprise or shock you—if indeed that were possible in an age of nuclear fission and prospective interplanetary travel,” he intoned. “But the simplest way I can summarize the situation is to say that there are now in the world machines that think, that learn, and that create. Moreover, their ability to do these things is going to increase rapidly until in a visible future the range of problems they can handle will be coextensive with the range to which the human mind has been applied.” Given the “speed with which research in this field is progressing,” Simon urged humanity to engage in some serious soul-searching: “The revolution in heuristic problem solving will force man to consider his role in a world in which his intellectual power and speed are outstripped by the intelligence of machines.”

Simon’s astonishing pronouncement was more than mere bluster, for in collaboration with RAND researcher Allen Newell, he was hard at work on the General Problem Solver—a computer program they hoped would begin making superhuman machine intelligence a reality. Translating Aristotle’s notion of means-ends analysis into an algorithm, the program sought to minimize the distance from an initial state to the desired goal according to rules provided by the user. The discovery of a few powerful inference mechanisms like those embodied by the General Problem Solver, they hoped, would be the breakthrough that enabled the creation of machines boasting greater-than-human intelligence.
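To make the idea of means-ends analysis concrete, here is a minimal Python sketch under stated assumptions. It is not Simon and Newell's program: the operator encoding (preconditions, add list, delete list) is borrowed from later STRIPS-style planners rather than GPS's own table of differences, and the names `Operator`, `achieve`, `means_ends`, and the coffee-making domain are hypothetical. The planner notices a difference between the current state and a goal, picks an operator whose effects remove that difference, and treats that operator's preconditions as new subgoals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """A hypothetical STRIPS-style operator (not GPS's original encoding)."""
    name: str
    preconditions: tuple  # facts that must hold before the operator applies
    add: frozenset        # facts the operator makes true
    delete: frozenset     # facts the operator makes false


def achieve(state, goal, operators, depth, stack):
    """Try to make `goal` true; return (plan, new_state) or None."""
    if goal in state:
        return [], state              # no difference left to reduce
    if depth == 0 or goal in stack:
        return None                   # give up on deep or circular subgoals
    for op in operators:
        if goal not in op.add:
            continue                  # this operator does not reduce the difference
        plan, current = [], state
        for pre in op.preconditions:  # each precondition becomes a subgoal
            result = achieve(current, pre, operators, depth - 1, stack + (goal,))
            if result is None:
                break                 # subgoal failed; try the next operator
            sub_plan, current = result
            plan.extend(sub_plan)
        else:
            # Re-check: achieving a later subgoal may have undone an earlier one.
            if all(p in current for p in op.preconditions):
                current = (current - op.delete) | op.add
                return plan + [op.name], current
    return None


def means_ends(state, goals, operators, depth=10):
    """Achieve each goal in turn; return (plan, final_state) or None."""
    current, plan = frozenset(state), []
    for goal in goals:
        result = achieve(current, goal, operators, depth, stack=())
        if result is None:
            return None
        sub_plan, current = result
        plan.extend(sub_plan)
    # A later goal may have clobbered an earlier one, so verify them all.
    return (plan, current) if all(g in current for g in goals) else None


# Hypothetical toy domain: get a cup of coffee.
OPERATORS = [
    Operator("walk to kitchen", ("at desk",),
             frozenset({"at kitchen"}), frozenset({"at desk"})),
    Operator("brew coffee", ("at kitchen", "have beans"),
             frozenset({"have coffee"}), frozenset({"have beans"})),
]

print(means_ends({"at desk", "have beans"}, ["have coffee"], OPERATORS))
print(means_ends({"at desk"}, ["have coffee"], OPERATORS))  # no way to get beans: None
```

Everything the sketch can do depends on the operators a person supplies: starting without beans, it finds no operator that produces them and simply fails, which is a small-scale version of the limitation the essay describes for problems that are not fully specified.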

Astonishing as the hubris and naïveté of the pioneering artificial intelligence researchers appear in hindsight, the considerable success of their earliest experiments fueled their overconfidence. Starting from literally nothing, every toy example coaxed out of the crude computers of the time looked like, and really was, a triumph. At the beginning of the 1950s, skeptics scoffed at the notion that computers would ever play chess at all, much less well—yet by the time Simon gave his speech, programs had been developed to play chess and checkers, translate sentences from Russian to English, and even, in the case of Simon and Newell’s “Logic Theorist,” prove mathematical theorems. At this astronomical rate of progress, it seemed that what John McCarthy had dubbed “artificial intelligence” in 1956 might achieve spectacular results in the not-too-distant future. Simon and Newell certainly thought so, predicting confidently that by 1967 “a digital computer will be the world’s chess champion, unless the rules bar it from competition” (a milestone a computer passed only in the late 1990s) and that one of its brethren would “discover and prove an important new mathematical theorem.”

Much to their chagrin, Simon and Newell discovered that the General Problem Solver was not the breakthrough they had envisioned—even though it could, as promised, solve any fully specified symbolic problem. With the right inputs and enough computing resources, the General Problem Solver could solve logic puzzles or prove geometric theorems. Simon and Newell even attempted to program it to improve itself, almost certainly the first attempt to create a self-improving reasoning machine. But for all its generality, the General Problem Solver proved quite bad at solving practical problems: as it turned out, most real-world problems were problems precisely because they were not fully specified. It became an object of mockery for later AI researchers. In 1976 Yale University professor Drew McDermott dismissed Simon and Newell’s creation as “a particularly stupid program for solving puzzles.” Lamenting that it had “caused everybody a lot of needless excitement and distraction,” McDermott suggested that it “should have been called LFGNS—‘Local Feature-Guided Network Searcher.’”

A Mistaken Conflation of Inference with Intelligence

The failure of programs like the General Problem Solver forced the field of artificial intelligence to accept that its early assumptions about the nature of intelligence had been mistaken. Stanford University’s Edward Feigenbaum noted in the 1970s that “[f]or a long time AI focused its attention almost exclusively on the development of clever inference methods,” only to discover that “the power of its systems does not reside in the inference method.” Not only did researchers find that powerful inference mechanisms offered little advantage; they also learned that “almost any inference method will do,” as “the power resides in the knowledge.” This unwanted discovery inspired a massive reorientation of AI research toward “knowledge-based reasoning” during the 1970s. It also poses substantial obstacles to the kind of “intelligence explosion” Bostrom fears, since it implies that machines could not become “superintelligent” merely by refining their inference algorithms.

Bostrom’s descriptions of how machines might rapidly improve their intelligence make it clear that he does not appreciate that the knowledge possessed by reasoning programs is much more important than how those programs work. Asserting that “even without any designated knowledge base, a sufficiently superior mind might be able to learn much by simply introspecting on the workings of its own psyche,” he muses that “perhaps a superintelligence could even deduce much about the likely properties of the world a priori (combining logical inference with a probability prior biased towards simpler worlds, and a few elementary facts implied by the superintelligence’s existence as a reasoning system).”

Bostrom’s mistaken conflation of inference mechanisms with intelligence is also apparent in his colorful descriptions of how intelligent machines might annihilate humanity. Simply depriving AIs of information about the world is not adequate to render them safe, he claims, as they might be able to accomplish such feats as solving extremely complex problems in physical science without the need to carry out real-world experiments. In a scenario borrowed from Yudkowsky, Bostrom posits that a superintelligence might “crack the protein folding problem” and then manipulate a gullible human into mixing mail-ordered synthesized proteins “in a specified environment” to create “a very primitive ‘wet’ nanosystem, which, ribosome-like, is capable of accepting external instructions; perhaps patterned acoustic vibrations delivered by a speaker attached to the beaker.” It could then employ this system to bootstrap increasingly sophisticated nanotechnologies, and “at a pre-set time, nanofactories producing nerve gas or target-seeking mosquito-like robots might then burgeon forth simultaneously from every square meter of the globe (although more effective ways of killing could probably be devised by a machine with the technology research superpower).” This scenario doesn’t just strain a reader’s credulity; it also implies a fanciful understanding of the nature of technological development in which “genius” can somehow substitute for hard work and countless intermediate failures. In the real world, the “lone genius inventor” is a myth; even smarter-than-human AIs could never escape the tedium of an iterative research and development process.

A Misguided Approach to the Control Problem

The findings of artificial intelligence researchers bode ill for Bostrom’s recommendations for how to prevent superintelligent machines from determining the fate of mankind. The second half of Superintelligence is devoted to strategies for approaching what Bostrom terms the “control problem.” While creating economic or ecological incentives for artificial intelligences to be friendly toward humanity might seem like obvious ways to keep AI under control, Bostrom has little faith in them; he believes the machines will be powerful enough to subvert these obstacles if they want. Dismissing “capability control” as “at best, a temporary and auxiliary measure,” he focuses the bulk of his analysis on “giving the AI a final goal that makes it easier to control.” Although Bostrom acknowledges that formulating an appropriate goal is likely to be extremely challenging, he is confident that intelligent machines will aggressively protect their “goal content integrity” no matter how powerful they become—an idea he appears to have borrowed from AI theorist Stephen Omohundro. Bostrom devotes several chapters to how to specify goals that can be incorporated into “seed AIs,” so they will protect human interests once they become superintelligent.

If machines are somehow able to develop the kind of godlike superintelligence Bostrom envisions, artificial intelligence researchers have learned the hard way that the nature of reason itself will work against this plan to solve the “control problem.” The failure of early AI programs such as the General Problem Solver to deal with real-world problems resulted in considerable part from their inability to redefine their internal problem representation; if their designers failed to provide an efficient way to represent the problem in the first place, the programs usually choked.

By the early 1970s, it became apparent that the solution to this challenge probably lay in drawing upon domain-specific knowledge to develop higher-level, conceptual representations of problems. Empowered with higher-level concepts, the problem space could be radically reduced; the AI programs need consider only things that “really mattered.” This process, however, was tantamount to inventing a new symbolic language and translating the original problem into it. But due to the inherent undecidability of logical reasoning, it is a mathematical impossibility to ensure that these translations truly “mean” the same thing as the original. AI researchers in the 1970s noticed this phenomenon, but were too concerned with getting their programs to work to care much.

The culmination of 1970s investigations into knowledge-based reasoning, Douglas Lenat’s heuristic learning program EURISKO remains justifiably famous for repeatedly humiliating its human opponents. In contrast to the General Problem Solver, which was crippled by its internal rigidity, EURISKO was designed to utilize Lenat’s Representation Language Language—a system of knowledge representation that could modify itself to add new concepts, extend or modify existing ones, or delete them if it deemed them superfluous. This ability extended even to the rules for how to discover new rules, so EURISKO could invent new ways to be creative.

To test his creation, Lenat decided to compete in a wargame called the Traveller Trillion Credit Squadron (TCS). Each player received a trillion “credits” to build a fleet of futuristic warships that they then pitted against other players’ fleets. Never having played the game himself, or even seen it played, Lenat painstakingly added domain knowledge from the TCS rules to EURISKO, which then tested its own modifications of these concepts in simulated fleet encounters. When experienced players first saw EURISKO’s fleet at the national tournament in 1981, they laughed at the seemingly preposterous assortment of ships the program had created. The mockery stopped, however, when it swiftly trounced all of them. EURISKO had identified a counterintuitive synergy of loopholes in the rules that made its defense unbeatable, and it became the top-ranked player in the United States. Furious, the TCS organizers modified the rules for the 1982 tournament, only to have the program discover a totally different, offense-dominated strategy to circumvent these changes and take first prize again.

Impressive as this feat was, EURISKO could not have achieved it without human assistance: while it could invent novel solutions, most of its original ideas were stupid, and it lacked an effective means of determining which ones were not. Devoid of any knowledge beyond what Lenat originally provided, EURISKO could not recognize the pointlessness of a new idea except by exhaustive testing in simulated games. The program required human feedback to provide its new concepts with human-readable labels and to weed out futile lines of investigation. Lenat wrote that “the final crediting of the win should be about 60/40% Lenat/EURISKO, though the significant point here is that neither party could have won alone.” More worrying, due to its ability to modify itself, the program required supervision to prevent pathological changes to its control structure. Eventually this problem compelled Lenat to limit EURISKO’s capacity for self-modification.

The case of EURISKO and other knowledge-based reasoning programs indicates that even superintelligent machines would struggle to guard their “goal-content integrity” and increase their intelligence simultaneously. Obviously, any superintelligence would grossly outstrip humans in its capacity to invent new abstractions and reconceptualize problems. The intellectual advantages of inventing new higher-level concepts are so immense that it seems inevitable that any human-level artificial intelligence will do so. But it is impossible to do this without risking changing the meaning of its goals, even in the course of ordinary reasoning. As a consequence, actual artificial intelligences would probably experience rapid goal mutation, likely into some sort of analogue of the biological imperatives to survive and reproduce (although these might take counterintuitive forms for a machine). The likelihood of goal mutation is a showstopper for Bostrom’s preferred schemes to keep AI “friendly,” including for systems of sub-human or near-human intelligence that are far more technically plausible than the godlike entities postulated in his book.

The Obstacles to Superintelligence

Emboldened by the success of programs like EURISKO, in the 1980s artificial intelligence researchers devoted great effort to the development of knowledge-based reasoning—only to discover fundamental limitations that increase the obstacles to the creation of superintelligent machines. Google Director of Research Peter Norvig later recounted that the field of knowledge representation struggled to find “a good trade-off between expressiveness and efficiency.” Frustratingly, it turned out that the elusiveness of such a balance did not result from a failure of human engineering insight; by the end of the 1980s mathematical analyses emerged showing “that even seemingly trivial [knowledge representation] languages were intractable—in the worst case, it would take an exponential amount of time to answer a simple query.” This means that machines would have a hard time becoming superintelligent simply by adding more knowledge: They might be able to know far more than humans, but exploiting that knowledge would take longer and longer as the amount of knowledge they reasoned with increased. As Norvig concluded, “No amount of knowledge can solve an intractable problem in the worst case.”
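The worst-case blowup Norvig describes can be felt, though not proved, with a deliberately naive method. The Python sketch below is an illustration under stated assumptions, not one of the 1980s analyses: it answers a propositional query by truth-table enumeration, checking every true/false assignment to the symbols of a hypothetical knowledge base (`chain_kb` and `entails` are invented names). Each added symbol doubles the work. Cleverer algorithms handle many knowledge bases far faster (this particular chain of implications yields to simple forward chaining), but for general propositional entailment no algorithm is known that avoids exponential time in the worst case.

```python
from itertools import product


def entails(clauses, query, symbols):
    """Truth-table entailment: does every model of `clauses` also satisfy `query`?

    Returns (answer, assignments_examined). Each clause and the query are
    functions from a model (dict of symbol -> bool) to bool.
    """
    examined = 0
    for values in product([False, True], repeat=len(symbols)):
        examined += 1
        model = dict(zip(symbols, values))
        if all(clause(model) for clause in clauses) and not query(model):
            return False, examined  # found a counterexample; stop early
    return True, examined


def chain_kb(n):
    """Hypothetical knowledge base: p0 is true, and each p_i implies p_(i+1)."""
    symbols = [f"p{i}" for i in range(n)]
    clauses = [lambda m: m["p0"]]
    for a, b in zip(symbols, symbols[1:]):
        # Default arguments bind a and b now, not when the lambda is called.
        clauses.append(lambda m, a=a, b=b: (not m[a]) or m[b])
    query = lambda m, last=symbols[-1]: m[last]  # "is the last symbol true?"
    return clauses, query, symbols


for n in (5, 10, 15):
    answer, examined = entails(*chain_kb(n))
    print(n, answer, examined)  # prints 5 True 32, then 10 True 1024, then 15 True 32768
```

Because the query really is entailed, the checker can never stop early and must examine all 2^n assignments; adding knowledge (more symbols) makes answering the same kind of simple question steadily more expensive.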

Beginning in the late 1980s, the type of “symbolic” AI research exemplified by the General Problem Solver and EURISKO was increasingly eclipsed by a “connectionist” approach emphasizing neural networks. Although overoptimistic expectations led to a wave of disillusionment with this technology in the 1990s, during the 2000s more powerful computers, ever-increasing amounts of data, and improved algorithms combined with it to make artificial intelligence applications such as self-driving cars a reality. In the last few years, machines exploiting these advances have stoked apprehensions by demonstrating superhuman performance on tasks they had not been programmed to perform. For instance, Google DeepMind used a technique called “deep learning,” which combines neural networks in multiple layers, to play a variety of Atari 2600 games. Starting without any knowledge of how the games were supposed to be played, the program taught itself how to play some of them in an aggressive style no human could match. Videos of this feat helped convince industry leaders such as Musk and scientists such as Hawking that unstoppable AI might be more than the stuff of cinematic nightmare.

But while advanced machine learning technologies can be a terrible force for ill when applied to weapons systems or mass surveillance rather than to video games, that danger is qualitatively different from the prospect of machines that reason well enough to improve themselves exponentially. Neural networks can create highly efficient implicit systems of knowledge representation, but these are still subject to the tradeoff between expressiveness and efficiency identified in the 1980s. Furthermore, while these technologies have demonstrated astonishing results in areas at which older techniques failed badly, such as machine vision, they have yet to demonstrate the same sort of reasoning as symbolic AI programs such as EURISKO. Because the particular research program Bostrom proposes to study “AI safety” is preoccupied with the dubious prospect of superintelligence, the resources spent on it would be better expended reducing the existential risks we already face.

For all its entertainment value as a philosophical exercise, Bostrom’s concept of superintelligence is mostly a distraction from the very real ethical and policy challenges posed by ongoing advances in artificial intelligence. Although it has failed so far to realize the dream of intelligent machines, artificial intelligence has been one of the greatest intellectual adventures of the last 60 years. In their quest to understand minds by trying to build them, artificial intelligence researchers have learned a tremendous amount about what intelligence is not. Unfortunately, one of their major findings is that humans resort to fallible heuristics to address many problems because even the most powerful physically attainable computers could not solve them in a reasonable amount of time. As the authors of a 1993 textbook about problem-solving programs noted, “intelligence is possible because Nature is kind,” but “the ubiquity of exponential problems makes it seem that Nature is not overly generous.” As a consequence, both the peril and the promise of artificial intelligence have been greatly exaggerated.

But even if artificial intelligence is not tantamount to “summoning the demon” (as Elon Musk colorfully described it), AI-enhanced technologies might still be extremely dangerous due to their potential for amplifying human stupidity. The AIs of the foreseeable future need not think or create in order to sow mass unemployment or to enable new weapons technologies that undermine precarious strategic balances. Nor does artificial intelligence need to be smarter than humans to threaten our survival—all it needs to do is make the technologies behind familiar 20th-century existential threats faster, cheaper, and more deadly.

_______________________________

Edward Moore Geist is a MacArthur Nuclear Security Fellow at Stanford University’s Center for International Security and Cooperation (CISAC). Previously a Stanton Nuclear Security Fellow at the RAND Corporation, he received his doctorate in history from the University of North Carolina in 2013.

Go to Original – thebulletin.org
